
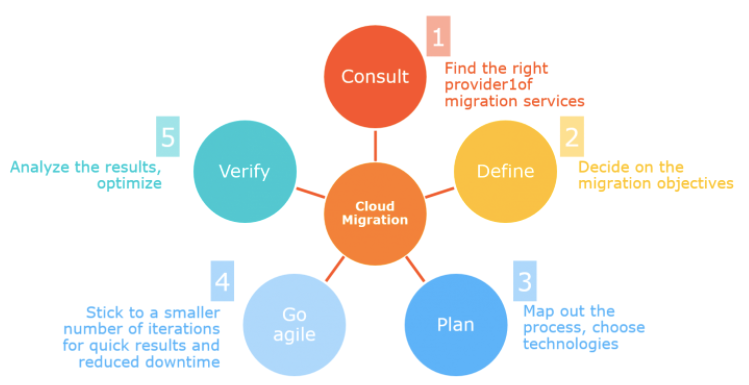

The public cloud is fast becoming the platform of choice for IT leaders and their line-of-business counterparts. As the move to on-demand IT continues to quicken, CIOs face a bewildering array of providers and services. There is no common framework for assessing Cloud Service Providers (CSPs), and no two CSPs are the same, which complicates the process of selecting the one that is right for your organization. Most organizations rely on public cloud infrastructure because of the shared responsibility model, under which the cloud service provider takes care of the cloud itself while you focus on what’s in the cloud, i.e. your data and applications. But how do you choose which public cloud provider will best serve your organization?

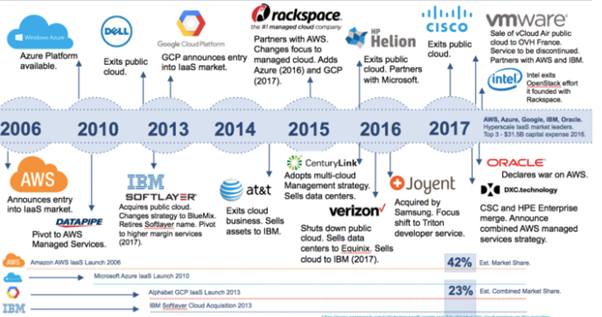

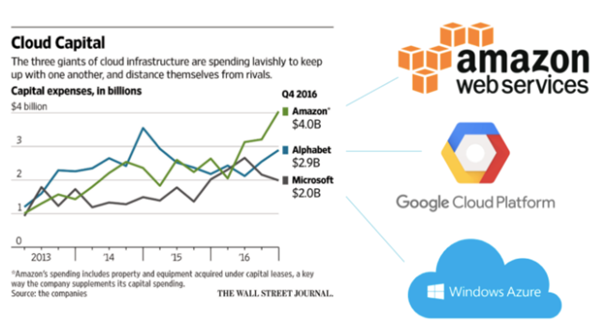

The field has many competitors, but Amazon Web Services and Microsoft Azure dominate the cloud industry. AWS has been in the game the longest and holds about 33%* of the market, with Microsoft in second position at 13%. A superficial glance might suggest that AWS has an unassailable edge over Azure, but a deeper look shows the decision isn’t that simple.

AWS has long benefited from an early start: it first launched in 2002, whereas Microsoft did not enter the cloud market until 2010. Azure was not well received at first and faced many challenges, as AWS had more capital, more infrastructure, and better, more scalable services. Amazon also kept adding servers to its cloud infrastructure and made better use of economies of scale. This was a setback for Microsoft, but the tide soon changed. Microsoft revamped its cloud offering and added support for a variety of programming languages and operating systems, making its platform far more versatile. Today Azure is one of the leading cloud providers in the world.

Both Azure and AWS have, in their own ways, contributed to the welfare of society. NASA used the AWS platform to make its huge repository of pictures, videos, and audio files easily discoverable in one centralized location, giving people access to images of faraway galaxies. The Azure IoT Suite was used to create the Weka Smart Fridge, an Internet of Things medical device that improves the storage and distribution of vaccines throughout the healthcare supply chain. It has helped non-profit medical agencies ensure that their vaccines reach people who would otherwise lack access to them.

To determine the best cloud service provider, you need to weigh multiple factors, such as cloud storage pricing, data transfer loss rate, and data availability rates, among others. A few principal elements that almost every organization should consider when choosing a provider to migrate to are:

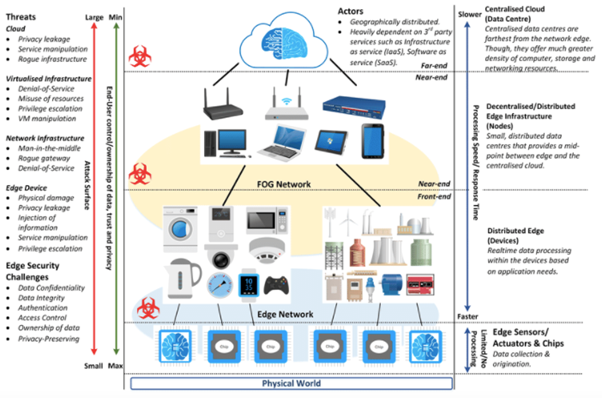

Security – Consider which security features each vendor you’re evaluating offers free out of the box, which additional paid services the providers themselves offer, and where you may need to supplement with technology from third-party partners. Most providers make this relatively simple by listing their security features, paid products, and partner integrations in the security sections of their websites. Security is a top concern in the cloud, so it is critical to ask detailed, explicit questions about your unique use cases, industry, regulatory requirements, and any other concerns you may have.

Compliance – Next, make sure you choose a cloud platform that can help you meet the compliance standards that apply to your industry and organization. Whether you are beholden to GDPR, SOC 2, PCI DSS, HIPAA, or any other framework, make sure you understand what it will take to achieve compliance once your applications and data reside in public cloud infrastructure. Be clear about where your responsibilities lie and which aspects of compliance the provider will help you check off.

Architecture – When choosing a cloud provider, think about how its architecture will fit into your workflows now and in the future. If your organization has already invested heavily in the Microsoft ecosystem, it might make sense to proceed with Azure, since Microsoft often offers existing customers licensing benefits and free credits. If your organization relies more on Amazon, it may be best to look to AWS for ease of integration and consolidation. You may also want to consider cloud storage architectures when making your decision. Both AWS and Azure have similar storage architectures and offer multiple types of storage to fit different needs, but their archival offerings differ (for example, the Amazon S3 Glacier storage classes on AWS and the Archive access tier of Azure Blob Storage).

There are many motivations for evolving from an entirely on-prem infrastructure to a multi-cloud or hybrid cloud architecture. From the very beginning of the cloud adoption process, hybrid cloud architectures allow enterprises to benefit from cloud economics and scalability without compromising data sovereignty. A multi-cloud storage deployment also brings the enterprise many benefits, from avoiding vendor lock-in to accommodating mergers and acquisitions and optimizing price/performance.

Service Levels – This consideration is essential when businesses have strict needs in terms of availability, response time, capacity, and support. Cloud Service Level Agreements (Cloud SLAs) are an important element to consider when choosing a provider. It’s vital to establish a clear contractual relationship between a cloud service customer and a cloud service provider.
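To make availability figures in an SLA more concrete, the short sketch below converts an availability percentage into the downtime it permits over a measurement period. It assumes a 30-day month as that period; real SLAs from AWS, Azure, or any other provider define their own periods, exclusions, and service credits, and the function name here is purely illustrative.

```python
# Assumed measurement period: a 30-day month (real SLAs define their own).
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60


def allowed_downtime_minutes(availability_percent: float,
                             period_minutes: int = MINUTES_PER_30_DAY_MONTH) -> float:
    """Return how many minutes of downtime an availability target permits."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    # The unavailable fraction of the period, expressed in minutes.
    return period_minutes * (1 - availability_percent / 100)


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.95, 99.99):
        print(f"{target}% -> {allowed_downtime_minutes(target):.1f} min/month")
```

Each extra "nine" cuts permitted downtime by a factor of ten, which is why the difference between, say, 99.9% and 99.99% matters when comparing providers' SLAs.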

Costs – While it should never be the only or most important factor, there’s no denying that cost will play a big role in deciding which cloud service provider you choose. It’s helpful to look at both the sticker price and associated costs. AWS historically billed compute by rounding usage up to the next full hour, with a one-hour minimum; since 2017, many Amazon EC2 instances have instead been billed per second, with a 60-second minimum. Azure bills customers on demand by the hour, by the gigabyte, or per million executions, depending on the specific product. Serverless computing is a newer cloud execution model in which the provider runs the servers and dynamically manages the allocation of machine resources. Pricing is based on the actual amount of resources an application consumes, rather than on pre-purchased units of capacity.
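The billing models described above can produce quite different bills for the same workload. The sketch below compares hourly billing (rounded up, one-hour minimum), per-second billing (with an assumed 60-second minimum), and a serverless model charged on GB-seconds of compute plus request count. All rates are hypothetical placeholders, not real AWS or Azure prices, and the function names are illustrative; check each provider’s current pricing pages before relying on any figures.

```python
import math

# Hypothetical on-demand rate, for illustration only (not a real provider price).
HOURLY_RATE_USD = 0.10


def cost_rounded_up_to_hours(runtime_seconds: int,
                             hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Bill whole hours: partial hours round up, with a one-hour minimum."""
    billed_hours = max(1, math.ceil(runtime_seconds / 3600))
    return billed_hours * hourly_rate


def cost_per_second(runtime_seconds: int,
                    hourly_rate: float = HOURLY_RATE_USD,
                    minimum_seconds: int = 60) -> float:
    """Bill each second used, subject to a minimum charge (60 s assumed)."""
    billed_seconds = max(minimum_seconds, runtime_seconds)
    return billed_seconds * hourly_rate / 3600


def cost_serverless(invocations: int, avg_duration_s: float, memory_gb: float,
                    rate_per_gb_second: float,
                    rate_per_million_requests: float) -> float:
    """Bill only consumed resources: compute in GB-seconds plus request count."""
    gb_seconds = invocations * avg_duration_s * memory_gb
    compute_cost = gb_seconds * rate_per_gb_second
    request_cost = invocations / 1_000_000 * rate_per_million_requests
    return compute_cost + request_cost


if __name__ == "__main__":
    runtime = 65 * 60  # a job that runs for 1 hour and 5 minutes
    print(f"Rounded up to hours: ${cost_rounded_up_to_hours(runtime):.4f}")
    print(f"Per-second billing:  ${cost_per_second(runtime):.4f}")
    # One million half-second invocations at 0.5 GB, with hypothetical rates.
    print(f"Serverless:          ${cost_serverless(1_000_000, 0.5, 0.5, 0.00001, 0.20):.2f}")
```

For the 65-minute job, hourly rounding charges for two full hours, while per-second billing charges only for the time actually used; the serverless figure depends entirely on invocation volume and duration rather than on provisioned capacity.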

While the criteria discussed above won’t give you all the information you need, they will help you build a solid analytical framework for deciding which cloud service provider to trust with your data and applications. You can add granularity through a thorough analysis of your organization’s requirements, uncovering additional factors that will help you make an informed decision. This will be key to determining which provider can deliver the features and resources that best support your ongoing business, operational, security, and compliance goals.

References:

*How to choose your cloud provider: AWS, Google or Microsoft? Retrieved from ThreatStack.com

“Cloud is about how you do computing, not where you do computing,” as Paul Maritz, former CEO of VMware, rightly said.

The coronavirus quarantine has forced many organisations across various industries to establish remote operations, and not all companies have been able to handle the forced move to a virtual office. Before the pandemic, only 62* percent of workloads were in the Cloud, but 87 percent of IT decision makers expect 95 percent of workloads to be in the Cloud by 2025. Covid-19 has acted as a catalyst for this acceleration in cloud migration.

The current state of remote work was largely unforeseen: no disaster recovery plan had accounted for a mass outbreak of a virus. A transition to remote work on this scale would not have been possible with the server-led infrastructure of 15 to 20 years ago. Large enterprises can now deliver new services 30 to 60** percent faster through cloud migration. Several months into quarantine, organisations have started refining and optimising their workloads in the Cloud. When and how businesses will be able to resume on-premises activities at the office remains a big question.

The cost of migration was one of the main reasons many companies had not moved to the Cloud. But current circumstances have led some organisations around the globe to renew their efforts to get into the public cloud. It is time to stop assuming that everything runs on a corporate-owned machine in a corporate office, and instead use this opportunity to focus on virtualising servers, storage, and networks. In this time of crisis, virtualisation needs to extend to end-user devices, and Mobile Device Management is something every company has to think about.

Though corporate IT resources are built to offer high levels of security, quarantines mean that direct, in-person access to them is limited, if not completely unavailable. Enterprises considering digital transformation before the pandemic might have planned to move only 30-40*** percent of their existing infrastructure to the public cloud. But now, more than 70** percent of executives have said they believe the cloud will help them innovate faster while reducing implementation and operational risks.

Long-term plans for organisations may include use of the public cloud, mobile computing, and a move to 5G wireless networks. These allow companies to operate anytime and anywhere, something that comes much more easily to born-in-the-cloud companies. Large enterprises cannot move as nimbly, but the circumstances have shown the need for rapid change beyond static, datacentre-bound systems. Organisations that embrace flexibility will be able to recover faster than their competitors.

The entire process, from start to finish, requires significant change, and change management, in how an organisation’s teams interact, process, and share data with each other. The sweeping global transition to remote work has thrust virtual collaboration tools into the economic spotlight, and demand for them has sky-rocketed.

“Beyond the emergency action needed at the start of the pandemic, many organizations have turned to mitigating risk through flexibility of infrastructure,” says David Linthicum, chief cloud strategy officer at the audit and consulting firm Deloitte.

While cloud adoption offers a powerful opportunity to unlock business value, there remains noticeable hesitation about some of the challenges of this transition. Cybersecurity is the biggest concern and remains a significant barrier when companies think about migrating to the cloud. Security threats have increased substantially during Covid-19, and organisations need to recognise and respond to them. Advanced cybersecurity solutions are now available that can help strengthen the security architecture.

Cloud computing, long touted for its flexibility, reliability, and security, has emerged as one of the few saving graces for businesses during this pandemic. It is critical for companies to maintain operations, and even more critical to their ability to keep serving their customers.

Cloud adoption provides an avenue of growth that can help offset the economic challenges faced by many organisations. Cloud budgets today account for approximately 5** percent of the average IT budget, a figure that is likely to double by 2023.

As organisations have started adjusting to the new reality of the pandemic, cloud adoption represents a multi-billion-dollar opportunity for businesses in every region of the world. The world will eventually emerge from this period of remote work, but the way we do business will be transformed forever.

References:

*Sead Fadilpasic: Cloud migration set for major rise following pandemic, June 2020. Retrieved from ITProPortal

**Luv Grimond and Alain Schneuwly: Accelerating Cloud Adoption After Pandemic, June 2020. Retrieved from Jakarta Globe

***Joao-Pierre S. Ruth: Next Steps for Cloud Infrastructure Beyond the Pandemic, April 2020. Retrieved from Information Week